flōt Autonomous Blimp Robot

Hello! I am a MASc candidate in Mechanical and Industrial Engineering in the ASBLab at the University of Toronto, under the supervision of Professor Goldie Nejat. I completed my undergraduate degree at the University of Waterloo, where I obtained a BASc in Mechatronics Engineering. My research focuses on using computer vision to enable robots to navigate complex environments intelligently. This has led to research in both end-to-end navigation approaches and decomposed approaches that rely on object detection methods.

ASBLab

Research Assistant

NVIDIA

Machine Learning Software Engineering

NVIDIA

Graphics Software Engineer

Agfa Graphics

Computer Engineer

Wind Energy Group at the University of Waterloo

Wind Energy Research Assistant

flōt Autonomous Blimp Robot

Apprenticeship Learning via Inverse RL

Control and Localization of a Jumping Robot

Deep Generative Models

Reinforcement Learning with Deep Q Nets

Meta Learning (Learning to Learn)

University of Toronto

Master of Applied Science Candidate, Mechanical and Industrial Engineering

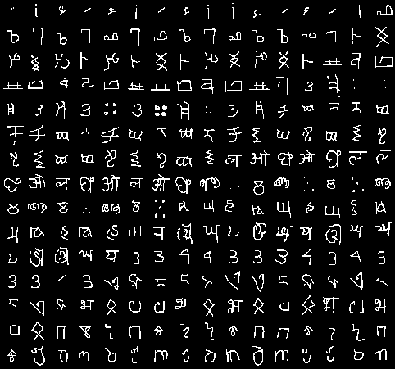

Research related to artificial intelligence, computer vision, optical character recognition, weakly supervised learning, meta learning, and robotics.

University of Waterloo

Bachelor of Applied Science, Mechatronics Engineering

Focus on robotics, artificial intelligence, and computer vision.

Apprenticeship Learning via Inverse RL

For my Markov Decision Processes class, two of my lab-mates and I presented the apprenticeship learning via inverse reinforcement learning algorithm. To aid the explanation, we implemented the method with two types of student: a tabular method and a double deep Q-network. To provide the required teacher signal, we trained experts using a known reward signal. The features gathered by the expert were then used within the apprenticeship learning algorithm. A more detailed explanation of the methodology can be found in our presentation on the topic.
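As a rough illustration of how the expert's features enter the algorithm, here is a minimal sketch of the discounted feature expectations and the first reward-weight update from the projection variant of apprenticeship learning. The function names, the trajectory format (a list of per-step feature vectors), and the discount value are assumptions made for this example, not the code from our presentation.

```python
from typing import List, Sequence, Tuple


def feature_expectations(
    trajectories: Sequence[Sequence[Sequence[float]]], gamma: float = 0.9
) -> List[float]:
    """Monte Carlo estimate of discounted feature expectations.

    Each trajectory is a sequence of per-step feature vectors phi(s_t).
    (Hypothetical data format chosen for this sketch.)
    """
    dim = len(trajectories[0][0])
    mu = [0.0] * dim
    for trajectory in trajectories:
        for t, phi in enumerate(trajectory):
            for i, value in enumerate(phi):
                mu[i] += (gamma ** t) * value
    # Average over the sampled trajectories.
    return [m / len(trajectories) for m in mu]


def reward_weights(
    mu_expert: Sequence[float], mu_student: Sequence[float]
) -> Tuple[List[float], float]:
    """First projection step: w = mu_expert - mu_student.

    The norm of w is the margin; the student is retrained on the
    reward w . phi(s) until the margin falls below a tolerance.
    """
    w = [e - s for e, s in zip(mu_expert, mu_student)]
    margin = sum(x * x for x in w) ** 0.5
    return w, margin


# Toy example: the expert always observes feature 0, the student feature 1.
mu_e = feature_expectations([[[1.0, 0.0], [1.0, 0.0]]], gamma=0.5)
mu_s = feature_expectations([[[0.0, 1.0], [0.0, 1.0]]], gamma=0.5)
w, margin = reward_weights(mu_e, mu_s)
```

In this toy case the recovered weights reward the feature the expert visits and penalise the one the student visits, which is the signal the student (tabular or deep Q) would then be trained on.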

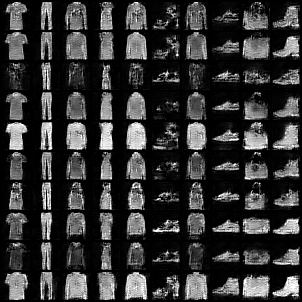

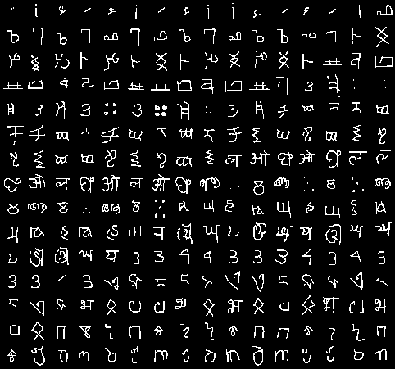

Deep Generative Models

I think generative models will have a lot of applicability in the future of neural networks, not only for robots but for many AI tasks in general. I was particularly interested in their ability to explicitly model intra-class factors of variation, creating regions of latent space that can generate images within the same class with intuitive factors of variation. In particular, these properties seem complementary to those desired and trained for in metric learning, namely ensuring that similar inputs map close together within the network's latent space. I therefore implemented several deep generative algorithms, including GANs and VAEs, following CS236.

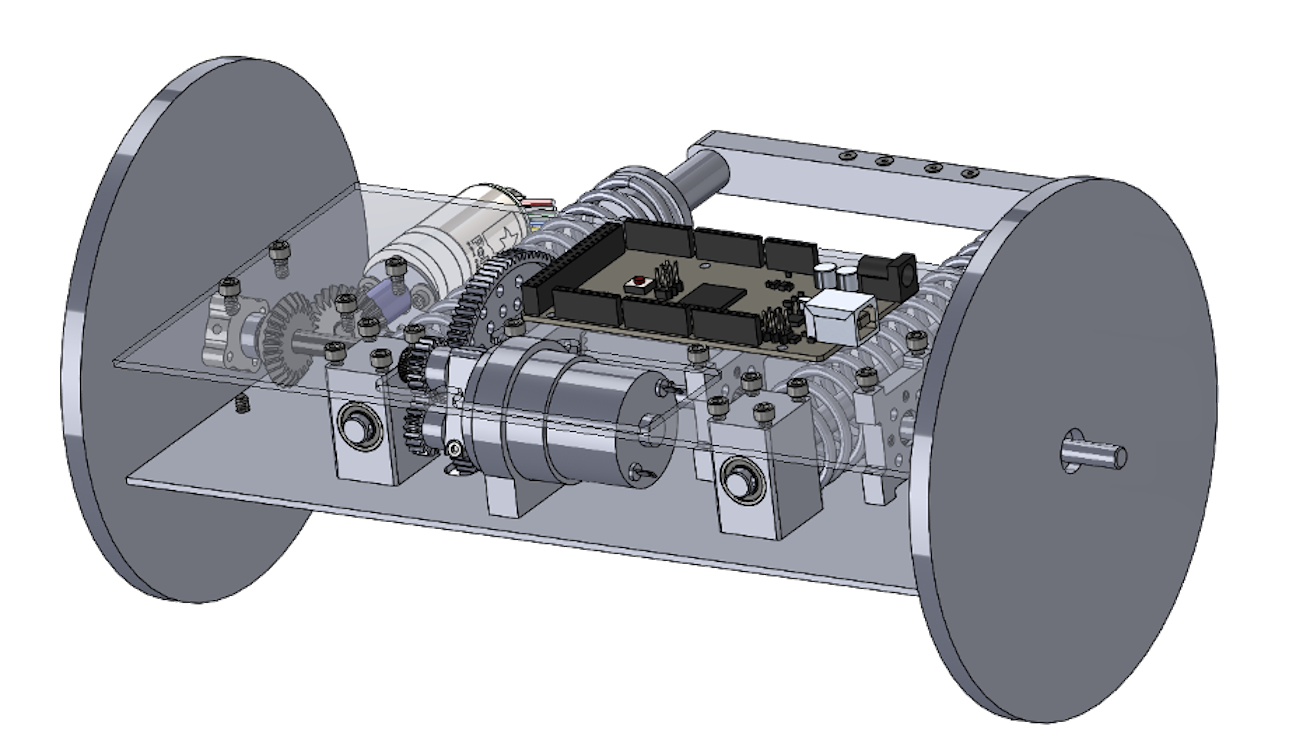

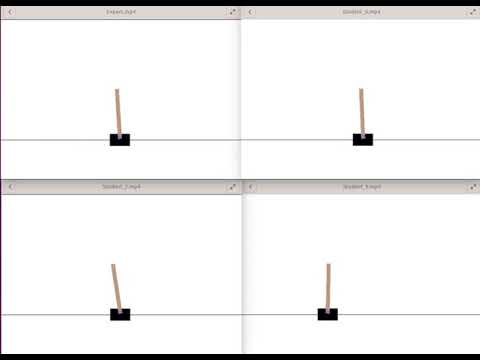

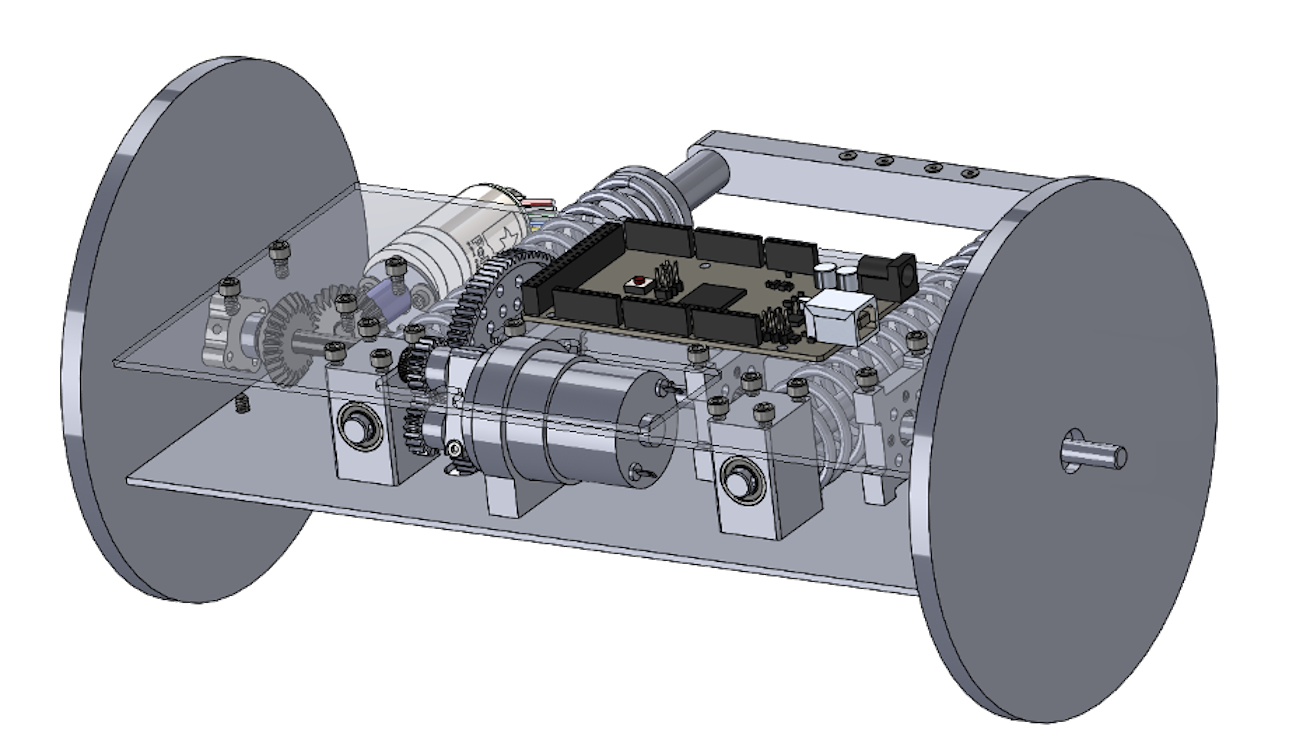

Jumping Robot

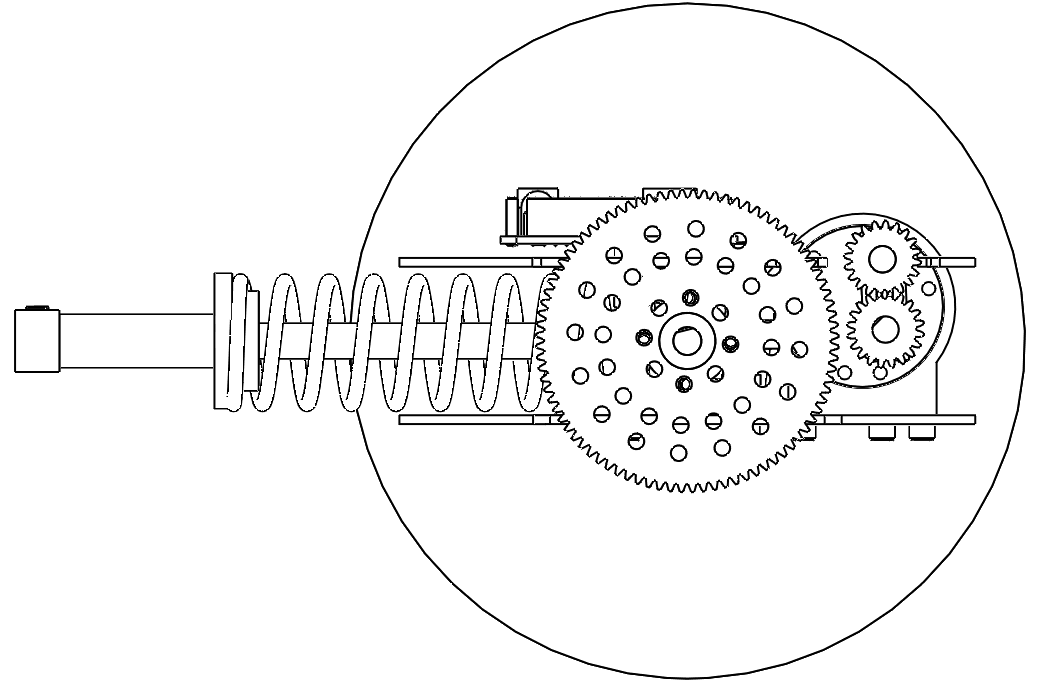

We designed a robot to complete an obstacle course that required it to travel to and reach a goal. One of the major challenges within the course was overcoming an approximately 1 meter tall obstacle in the center of the course. Given that this obstacle was known ahead of time, it was clear that the optimal way to cross to the other side was to go over it. This led to the creation of our jumping robot. We designed all mechanical, electrical, software, and control systems from the ground up to work together to achieve this goal.

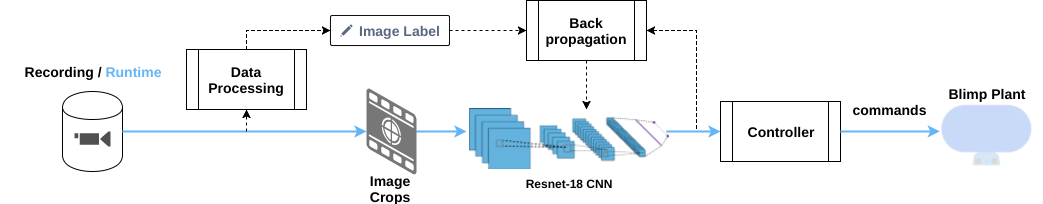

flōt Autonomous Blimp Robot

Flōt was my fourth-year design project in undergrad (check out the project website for more information), in which we explored the possibility of creating an autonomous blimp robot that could navigate within a home and provide smart home assistance. The robot's payload constraints posed significant technical challenges, severely limiting the selection of actuators, sensors, and on-board computation. We settled on a payload of a Raspberry Pi Zero W, a propeller module, a sonar for height tracking, and a camera. We explored various methods of learning to navigate within a complex home environment with the limited sensor payload, including traditional methods such as mapping and obstacle detection, as well as potential end-to-end learned methods such as imitation learning and deep RL.

Our implementation had to overcome several major obstacles, including the need for a "real-time" controller and the need to limit, as much as possible, the amount of labeling and human involvement required for its operation. These restrictions, and in particular the desire for the robot to operate out of the box without an explicit map, directed us to end-to-end approaches as part of our navigation stack.

Our first goal was to set up the training and evaluation code in simulation to enable rapid development. Many of the ideas used during this stage originally came from 'Learning to Fly by Crashing'. The training data was collected by randomly sampling trajectories until the robot crashed. A heuristic-based labelling system was then applied to generate labels. At a high level, we took the initial part of each trajectory to be of the positive class, and the later part, just before crashing, to be of the negative class. We trained a CNN to reproduce these classifications. Overall, it's reasonable to think of the network as trying to output the probability of crashing if it were to follow a particular trajectory. After the initial rounds, we went back, collected more data from the failure cases, and added it to our training set. This process is quite similar to the DAgger algorithm.
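The heuristic labelling described above can be sketched as follows. This is only an illustration under assumptions: the function name, the window sizes, and the choice to drop the ambiguous frames in the middle of a trajectory are hypothetical and not taken from the project code.

```python
from typing import Any, List, Sequence, Tuple


def label_trajectory(
    frames: Sequence[Any], safe_frames: int = 30, crash_frames: int = 10
) -> List[Tuple[Any, int]]:
    """Label one trajectory that ended in a crash.

    The first `safe_frames` frames get label 1 (positive, safe) and the
    last `crash_frames` frames get label 0 (negative, about to crash).
    Frames in between are skipped as ambiguous (an assumption of this
    sketch). The crash window takes priority on short trajectories.
    """
    n = len(frames)
    crash_start = max(n - crash_frames, 0)
    safe_end = min(safe_frames, crash_start)
    labelled = [(frames[i], 1) for i in range(safe_end)]
    labelled += [(frames[i], 0) for i in range(crash_start, n)]
    return labelled


# Toy example with frame indices standing in for camera images.
example = label_trajectory(list(range(10)), safe_frames=3, crash_frames=2)
```

The resulting (image, label) pairs are what a binary classifier such as the CNN would be trained on; data gathered from later failure cases can be labelled the same way and appended to the training set.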

Unfortunately, since our platform was slow moving, this approach was not transferable to real life. We overcame this limitation by emulating the data collection technique in real life: we manually moved a robot throughout a room and used distance measurements to determine possible collision scenarios for training. We manually filtered the data to remove the label noise caused by the limitations of the sonar sensor.

In the end, we integrated Amazon's Alexa into the robot, allowing it to perform some basic functions on request, such as taking a selfie.

Deep Q Networks

I reimplemented the deep Q-learning paper to play different Atari games. I also incorporated various extensions, such as multiple parallel agents and double Q-learning.
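For readers unfamiliar with the double Q-learning extension, the target computation can be sketched as below. This is a minimal illustration using plain lists in place of network outputs; the function and parameter names are assumptions for this example and not the code from my reimplementation.

```python
from typing import Sequence


def double_q_target(
    reward: float,
    done: bool,
    next_q_online: Sequence[float],
    next_q_target: Sequence[float],
    gamma: float = 0.99,
) -> float:
    """Double Q-learning target for a single transition.

    The online network's Q-values select the next action, and the
    target network's Q-values evaluate it, which reduces the
    overestimation bias of the standard max-based target.
    """
    if done:
        # No bootstrapping from a terminal state.
        return reward
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best_action]


# The online net prefers action 1, so the target net's value for
# action 1 is used even though its own maximum is at action 0.
target = double_q_target(1.0, False, [0.2, 0.9], [5.0, 3.0], gamma=0.5)
```

A standard DQN target would instead take the maximum of the target network's values (5.0 here), which is where the overestimation comes from.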

Meta Learning - Ongoing

In this ongoing project, I am exploring recent work in the area of meta-learning by reimplementing some recent papers following CS330. Thus far I have gathered some results on the use of memory-augmented neural networks for classification. I plan to incorporate the concepts learned into my future research.